Thus far I have employed what I’ve described as a “puritanical” approach to matching run estimator, denominator, and win conversion for each of the three frameworks for evaluating an individual’s offensive performance. While I think my logic for this approach is sound, I do not think it is necessarily wrong to mix components in a different manner than I’ve described. This will be a brief discussion of which of these potential hybrids make more sense than others, and a few issues to keep in mind if you choose to do so.

For the player as a team framework, there are many places in the process in which a batter’s value (at least to the extent that we can define and model it) as a member of a real team is distorted. At the very beginning of the process, a dynamic run estimator is applied directly to individual statistics. This creates distortion. Then the rate stat is runs/out; this doesn’t create a tremendous amount of distortion, as runs/out is a defensible if not perfect choice for an individual rate stat even if you don’t use the player as a team framework. Then if we convert to win value, we create a tremendous amount of distortion by essentially multiplying the run distortion by nine – instead of just mis-estimating an individual’s run contribution, we compound the problem by assuming that the entire team hitting like him would shape the run environment to be something radically different from the league average.

This is an opportune juncture to make a point that I should have made earlier in the series – for league average hitters, the distortion will be very small, and the differences across all of the various methodologies we’ve discussed will be smaller. I have focused in this series on a small group of hitters, basically the five most productive and five least productive in each league-season. These are of course the hitters most impacted by different methodological decisions. A league average hitter would by definition have Base Runs = LW_RC = TT_BsR (at least as we’ve linked these formulas in this series), would by definition have 0 RAA or 0 WAA, would by definition have a 100 relative rate, regardless of which approach you take. This series, and more generally my sabermetric interests, tend to focus on the extreme cases, and evaluating methods with an eye to applicability to a wide range of performance levels. Additionally, extreme players are the ones people in general tend to care the most about – you can imagine people debating who had the better offensive season, Frank Robinson in 1966 or Frank Thomas in 1994. I cannot imagine many people doing the same for Earl Battey and Gary Gaetti.

We could avoid compounding the issues in the player as a team approach, but why bother? The framework is inferior to linear weights or theoretical team in every possible way, except that one could argue ease of calculation gives it an advantage over TT. The only value to be had in evaluating a player as a team is as a theoretical exercise, and if you lose commitment to that theoretical exercise it ceases to have any value at all.

For the theoretical team framework, you could just calculate TT_BsR, and then treat it in the same way as linear weights, or calculate TT_BsRP and treat it in the same way as R+. However, it would be pretty silly to go through the additional effort needed to calculate the TT run estimates instead of their linear weight analogues only to use them in the same way. Unlike in the case of player as team, there is no argument to be made here that you would be making the results more reasonable by doing so, as the TT estimates can be combined with team-appropriate rate stat denominators and team-appropriate conversions from runs to wins. Here the result of mixing frameworks would be extra work coupled with less pure results.

The only mixture of frameworks that makes sense, then, is to mix the run estimation components of the linear weights framework with the rate stat and win conversion components of the theoretical team framework. A logical path that might defend this approach would be: It is questionable whether the valuations of tertiary offensive contributions claimed by the theoretical team approach are accurate or material. Thus our best estimate of a player’s run contribution to a theoretical team remains his linear weights RC or RAA. When it comes to win estimation, we are on much firmer ground in understanding how team runs and runs allowed translate to wins than we are in measuring individual contributions to team runs. We shouldn’t refuse to use this knowledge in the name of methodological consistency, but rather we should use the best possible estimates for each component of the framework. That means using the full Pythagenpat approach coupled with linear weights run estimates.

Convinced? I’m not, but let me walk through an example of how we could apply this hybrid approach to the Franks. We can start with our wRC estimate, which we will now use in place of TT_BsRP as “R+” going forward for this hybrid linear-theoretical team framework. Then we can use any of our TT rate stats - I’ll show R+/O+ rather than R+/PA+ here, as I think it’s the former that might serve to make this hybrid framework an attractive option. R+/O+ allows us to express the individual and team rates on the same basis and, better yet, to do so using the most fundamental of rate stats (R/O) as that basis.

O+ remains equal to PA*(1 – LgOBA), and these relative R+/O+ figures are the same as our relative R+/PA, which makes sense – the numerators are the same, and the only difference in the denominators is multiplying PA by (1 – LgOBA). So an alternative way of expressing the relationship is:

R+/O+ = (R+/PA)/(1 – LgOBA)

I will skip some steps here, since they were all covered in the last installment – no need to convert this to a W% and then convert back to a relative adjusted R+/O+.

TT_R/O = (R+/O+)*(1/9) + LgR/O*(8/9)

TT_x = ((TT_R/O)*LgO/G + LgR/G)^.29

TT_WR = ((TT_R/O)/Lg(R/O))^TT_x

RelAdj R+/O+ = (TT_WR^(1/r) - 1)*9 + 1
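A minimal Python sketch of the chain of formulas above, for anyone who wants to follow along in code. The function and argument names are illustrative only, `r` is assumed to be the reference-environment exponent carried over from the previous installment (it is passed in here rather than derived), and the inputs in the usage line are made-up placeholders, not either Frank's actual figures.

```python
def rel_adj_r_plus_per_o_plus(r_plus, pa, lg_oba, lg_r_per_o,
                              lg_o_per_g, lg_r_per_g, r):
    """Relative adjusted R+/O+ for the hybrid approach.

    r_plus is the linear weights run estimate (wRC) used in place of TT_BsRP.
    r is ASSUMED to be the reference exponent from the prior installment.
    """
    o_plus = pa * (1 - lg_oba)                       # O+ = PA*(1 - LgOBA)
    r_per_o = r_plus / o_plus                        # R+/O+
    tt_r_per_o = r_per_o / 9 + lg_r_per_o * 8 / 9    # 1/9 batter, 8/9 league
    tt_x = (tt_r_per_o * lg_o_per_g + lg_r_per_g) ** 0.29  # TT_x
    tt_wr = (tt_r_per_o / lg_r_per_o) ** tt_x        # TT_WR (win ratio)
    return (tt_wr ** (1 / r) - 1) * 9 + 1            # RelAdj R+/O+


# Placeholder inputs for illustration only (not real player or league data).
print(rel_adj_r_plus_per_o_plus(r_plus=130, pa=650, lg_oba=0.330,
                                lg_r_per_o=0.18, lg_o_per_g=25.5,
                                lg_r_per_g=4.59, r=1.88))
```

One quick sanity check on a sketch like this: a hitter whose R+/O+ equals the league R/O should come out at exactly 1 (a 100 relative rate), since his theoretical team's win ratio is 1.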

It might be helpful to take a step back and look at our results for the relative adjusted metric (whether R+/PA or R+/O+) for each of the four options we’ve considered, which are:

A: linear run estimate and fixed RPG based on league average (final rate based on R+/PA)

B: linear run estimate and dynamic RPG based on player’s impact on team (final rate based on R+/PA)

C: linear run estimate and Pythagenpat theoretical team win estimate (hybrid approach discussed in this installment; final rate based on R+/O+)

D: theoretical team run estimate (full theoretical team approach; final rate based on R+/O+)

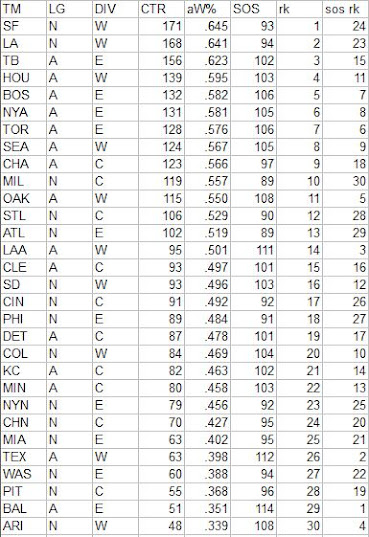

I included a third row which shows the percentage by which Thomas’ figure exceeded Robinson’s. The TT (D) approach maximizes each player’s value and also the difference between them. The hybrid approach (C) falls in between the pure linear approach (A) and the TT approach (D). One thing that I did not fully expect to see is that the linear approach that varies RPW based on the estimated RPG of a team with the player added (B) produces a lower estimate than any of the other approaches, and is the outlier of the bunch. I didn’t make enough of this in part 13, but we are actually better off assuming that the individual has no impact on RPW than adjusting RPW based on his own impact on the theoretical team’s RPG.

Note that when we translate to a reference league, we are fixing each theoretical team's RPG to the same level. In reality, the Frank Thomas TT will have a higher RPG than the Matt Walbeck TT for any given reference environment. However, this is not an issue, because the runs value we're reporting is not real. It is intended to be an equivalent run value that reflects the player's win contribution for a common frame of reference. When we use the full Pythagenpat approach, the theoretical team's winning percentage is preserved, so the runs are now an abstract quantity and do not need to tie out to any actual number of runs in this environment. In this sense, when we convert TT to a win-equivalent rate, we're doing something similar to what I said the linear weights framework was doing - we're making the run environments equal after adding the player. The difference is that in this case we are capturing the disparate impact of the players on team wins first, then restating a win ratio as an equivalent run ratio given the assumption of a stable run environment. Thus the runs are an abstraction, but the value they represent is preserved.

When we do the same with a dynamic RPW approach (i.e. when we use the Palmerian approach of adding the batter's RAA/G to the RPG and then calculating RPW), we run into difficulties because while we have fixed a WAA total, and can then translate that WAA to an equivalent for the reference environment, we have not taken into account that the batter would need to contribute more runs to actually produce the same number of wins. This is not a problem for the full Pythagenpat approach because we used a run ratio that modified the batter's abstract runs in concert with the run environment.

Now there is a way we could address this, but it results in a mess of an equation that I'm not sure has an algebraic solution (whether it does or not, I have no interest in trying to solve it). Basically, we can solve for the RAA that would be needed to preserve the batter's original WAA in the reference environment. To do this, we need to remember to apply the reference PA adjustment. For this application, I think it’s easiest to apply directly to the batter’s original WAA, so WAA*Ref(PA/G)/Lg(PA/G):

We could then say that adjWAA = X/(2*(refRPG + X/G)^.71) where X = the batter's RAA and G could be the batter's actual games or some kind of PA-equivalent games, as long as we’re consistent with what was used in the original WAA calculation. I used the Goal Seek function in Excel to solve the following equations for the Franks:

for Robinson: 8.552 = X/(2*(8.83 + X/155)^.71), X = 85.555

for Thomas: 7.217 = X/(2*(8.83 + X/113)^.71), X = 71.176
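For readers without Excel, the same kind of numerical solve can be sketched in Python. This swaps in plain bisection for Goal Seek. The function name and the search bracket are assumptions made for illustration, and the sketch only handles a positive adjusted WAA (the left side of the equation rises with X when X is positive, which is what makes bisection safe here).

```python
def solve_raa(adj_waa, ref_rpg, games, lo=0.0, hi=500.0, tol=1e-9):
    """Find X with X / (2*(ref_rpg + X/games)**0.71) == adj_waa by bisection.

    Assumes adj_waa > 0 and that the answer lies between lo and hi
    (both bounds are illustrative defaults, not values from the article).
    """
    def waa(x):
        # WAA implied by x runs above average with a dynamic RPW
        return x / (2 * (ref_rpg + x / games) ** 0.71)

    while hi - lo > tol:
        mid = (lo + hi) / 2
        if waa(mid) < adj_waa:
            lo = mid   # not enough runs yet, search higher
        else:
            hi = mid   # too many runs, search lower
    return (lo + hi) / 2


# Thomas inputs as given above: adjWAA 7.217, refRPG 8.83, 113 games
print(round(solve_raa(7.217, 8.83, 113), 2))
```

With the Thomas inputs this lands at roughly 71.2, in line with the Goal Seek figure; small differences in the last decimals are to be expected because the published inputs are rounded.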

With these new estimates of RAA, we can get relative adjusted R+/PA by first dividing by each batter’s PA, then adding in the reference R/PA, and then dividing by the reference R/PA:
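That conversion is simple enough to write as a one-line function. This is a sketch only: the function name is made up, and the PA and reference R/PA in the usage line are hypothetical placeholders, since the actual inputs are not restated in this installment.

```python
def rel_adj_r_plus_per_pa(raa, pa, ref_r_per_pa):
    """Relative adjusted R+/PA from a solved RAA.

    Divide RAA by PA, add the reference R/PA, then divide by the reference R/PA.
    """
    return (raa / pa + ref_r_per_pa) / ref_r_per_pa


# Hypothetical inputs for illustration only (not the Franks' actual figures).
print(rel_adj_r_plus_per_pa(raa=60.0, pa=600, ref_r_per_pa=0.12))
```

A batter with 0 RAA comes out at exactly 1 here, matching the earlier point that a league average hitter has a 100 relative rate under every approach.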

This approach allows us to more correctly calculate a win-equivalent rate stat for the linear weights framework when allowing RPW to vary based on the batter’s impact on his team’s run environment. However, given that I don't know how to solve for X algebraically, and given my pre-existing philosophical issues with using this approach with the linear weights framework to begin with, I think that at this point there are two better choices:

1. If you want to maintain methodological purity, stick with the fixed RPW for the linear weights framework like I advocate

2. Use the hybrid framework, which embraces the "real" run to win conversion and actually makes this math easier

With "B" out of the picture, what we see for A, C, and D makes sense in relation to each other. C produces a lower final relativity for great hitters like the Franks because it recognizes that when they inflate their team's run environments, RPW increases. D is higher than both because the initial run estimate is higher, due to using TT_BsR rather than LW, and thus giving each batter credit for their estimated tertiary contributions. And our revised linear weight approach falls somewhere in between.

Of course, it’s possible that I’m wrong about this, and the hybrid or theoretical team approaches that make use of Pythagenpat are overstating the impact of the player on the runs to win conversion. I can’t prove that I am right about this, but I would offer two rejoinders:

1. The full Pythagenpat approach produces the same W% for the theoretical team across different environments by definition, and that is the most important number at the end of the line if we are trying to build a win-equivalent rate stat.

2. We should expect the results from the linear and the Pythagenpat approaches to be similar (which they are after making proper adjustments), as our RPW formula is consistent with Pythagenpat for .500 teams. While the relationship between the RPW formula and Pythagenpat will fray as we insert more extreme teams, the theoretical team approach doesn’t produce any extremes for even great hitters like the Franks. For example, if we use the Palmer approach, Thomas’ 77.8 RAA in 113 games takes an average 1994 AL team that scores 5.23 R/G up to 5.92 R/G, which would rank second in the league, even with the Yankees. This is not an extreme team performance when it comes to applying the win estimation formulas. Such a team would be expected to have a .5623 W% using the RPW formula and a .5620 W% using Pythagenpat. So we shouldn’t expect the results to diverge too much when applying the approaches to derive win-equivalent rate stats.
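The Thomas comparison in the second point can be roughly reproduced with a short Python sketch. The function names are illustrative, the RPW formula is taken to be 2*RPG^.71 as used earlier in this installment, and the opponents are assumed to score the league-average 5.23 R/G. Because the inputs are rounded, the last digit may not match the figures quoted above exactly.

```python
def wpct_rpw(r_per_g, ra_per_g):
    """W% from run differential per game and RPW = 2*RPG^.71."""
    rpw = 2 * (r_per_g + ra_per_g) ** 0.71
    return 0.5 + (r_per_g - ra_per_g) / rpw


def wpct_pythagenpat(r_per_g, ra_per_g):
    """W% from Pythagenpat with exponent x = RPG^.29."""
    x = (r_per_g + ra_per_g) ** 0.29
    return r_per_g ** x / (r_per_g ** x + ra_per_g ** x)


lg_r_per_g = 5.23                          # 1994 AL average, from the text
team_r_per_g = lg_r_per_g + 77.8 / 113     # average team plus Thomas' RAA per game
print(round(wpct_rpw(team_r_per_g, lg_r_per_g), 3))
print(round(wpct_pythagenpat(team_r_per_g, lg_r_per_g), 3))
```

Both estimates come out near .562, and for a team that scores exactly as many runs as it allows, both return .500.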

And with that, we have come to the end of everything that I wanted to say about rate stats. I will close out the series with one final installment that attempts to briefly summarize my main points with limited math.